NHR4CES Community Workshop 2023

and RWTH Aachen University

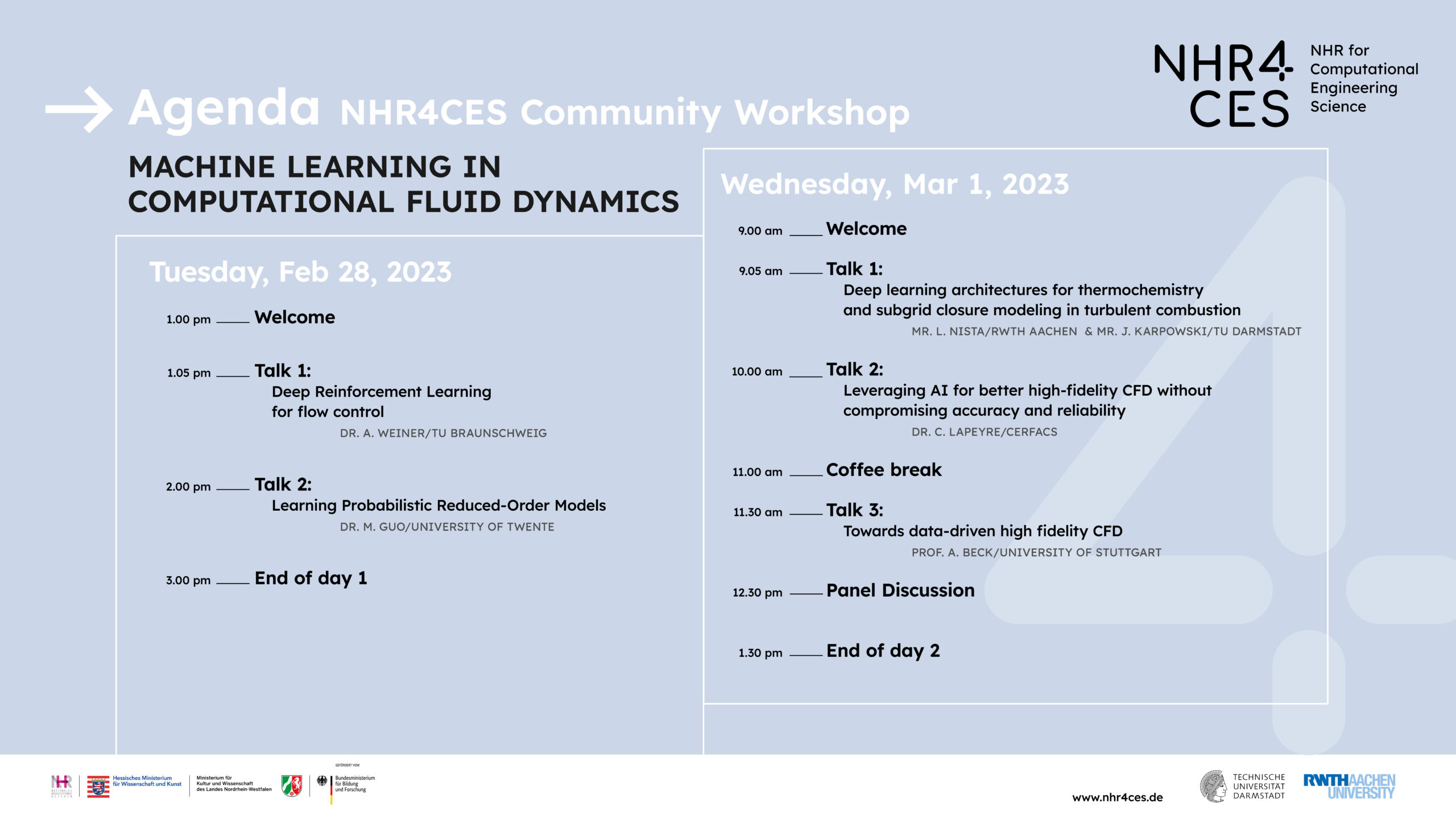

Machine learning in Computational Fluid Dynamics

Big data and Machine Learning (ML) are driving comprehensive economic and social transformations and are rapidly becoming a core technology for scientific computing, with numerous opportunities to advance different research areas such as Computational Fluid Dynamics (CFD).

The combination of CFD with ML has already been applied to several CFD configurations and is a promising research direction with the potential to advance so far unsolved problems, thanks to the ability of deep models to learn in a hierarchical manner with little to no need for prior knowledge. This approach represents a paradigm shift, changing the focus of CFD from time-consuming feature detection to in-depth examinations of relevant features and enabling deeper insight into the physics involved in complex natural processes.

The workshop is designed to highlight some of the areas of highest potential impact, including improving turbulence and combustion closure modeling, developing reduced-order models, and designing versatile neural network architectures. Emerging ML areas that are promising for CFD, as well as some potential limitations, will be discussed. The workshop aims to bring together different research groups by providing a venue to exchange new ideas, discuss challenges, and expose this new research field to a broader community.

Dr. Andre Weiner

Deep Reinforcement Learning for Flow Control

The talk provides an introduction to the optimization of closed-loop flow control laws by means of deep reinforcement learning (DRL) in the context of computational fluid dynamics (CFD).

Closed-loop flow control has a wide range of applications like gust mitigation for aircraft or process intensification of chemical plants. However, creating robust control laws that map potentially high-dimensional state spaces to suitable control actions is not straightforward. The core idea behind DRL is the optimization of neural-network-based control laws by trial-and-error learning. A so-called agent applies control actions to an environment, which then transitions to a new state, and returns a reward expressing its intrinsic objective.
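The agent-environment interaction described above can be illustrated with a deliberately small sketch. Everything in it is an assumption made for illustration: the hypothetical `ToyEnvironment` is a scalar linear system standing in for a CFD simulation, the control law is a single feedback gain rather than a neural network, and the trial-and-error update is a plain random search rather than an actual DRL algorithm. It only shows the loop of action, state transition, and reward.

```python
import random


class ToyEnvironment:
    """Hypothetical stand-in for a CFD environment (assumption, not a flow solver).

    Unstable scalar dynamics: x_{t+1} = 1.1 * x_t + u_t.
    """

    def __init__(self, x0=1.0):
        self.x0 = x0
        self.state = x0

    def reset(self):
        """Restart the episode from the initial state."""
        self.state = self.x0
        return self.state

    def step(self, action):
        """Apply a control action, transition to a new state, return a reward."""
        self.state = 1.1 * self.state + action
        reward = -self.state ** 2  # objective: keep the state close to zero
        return self.state, reward


def rollout(env, gain, n_steps=20):
    """Run one episode with the control law u = -gain * x; return the summed reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(n_steps):
        action = -gain * state
        state, reward = env.step(action)
        total_reward += reward
    return total_reward


def train(n_iterations=200, step_size=0.1, seed=0):
    """Trial-and-error search: perturb the gain and keep it if the return improves."""
    rng = random.Random(seed)
    env = ToyEnvironment()
    gain = 0.0
    best_return = rollout(env, gain)
    for _ in range(n_iterations):
        candidate = gain + rng.gauss(0.0, step_size)
        candidate_return = rollout(env, candidate)
        if candidate_return > best_return:
            gain, best_return = candidate, candidate_return
    return gain, best_return
```

Calling `train()` returns the learned gain and its episode return. In a CFD setting, each call to `rollout` would correspond to a full simulation, which is why sample efficiency matters so much.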

DRL in combination with CFD may be employed for early-stage optimization of closed-loop control applications, e.g., optimization of sensor positions or actuators. However, CFD-based environments are expensive to evaluate. One strategy to increase the sample efficiency, i.e., to leverage each simulation dataset to its maximum, is model-based DRL. Of the aforementioned topics, the talk focuses on optimal sensor placement strategies and discusses several pitfalls and challenges in the implementation of model-based DRL.

Dr. Mengwu Guo

Learning Probabilistic Reduced-Order Models

Efficient and credible multi-query, real-time simulations constitute a critical enabling factor for digital twinning, and data-driven reduced-order modeling is a natural choice for achieving this goal. This talk will discuss several probabilistic options for the learning of reduced-order models, in which a significantly reduced dimensionality of dynamical systems guarantees improved efficiency, and the facilitated uncertainty quantification certifies computational credibility.

The talk will start with reduced-order surrogate modeling using Gaussian process regression. In this scheme, the full-order solvers are used offline merely as a ‘black-box’ for data generation, whereas the online stage is entirely simulation-free, which guarantees reliable and efficient multi-query simulations of large-scale engineering assets. The second method is the Bayesian reduced-order operator inference, a non-intrusive, physics-informed approach that inherits the formulation structure of projection-based reduced-state governing equations yet without requiring access to the full-order solvers. The reduced-order operators are estimated using Bayesian inference. They probabilistically describe a low-dimensional dynamical system for the predominant latent states, and provide a naturally embedded Tikhonov regularization together with a quantification of modeling uncertainties. The third method employs deep kernel learning — a probabilistic deep learning tool that integrates neural networks into manifold Gaussian processes — for the data-driven discovery of low-dimensional latent dynamics from high-dimensional measurements given by noise-corrupted images.

This tool is utilized for both the nonlinear dimensionality reduction and the representation of reduced-order dynamics. Exemplifying applications will be discussed in different contexts of computational engineering and sciences.
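The link between Bayesian inference and Tikhonov regularization mentioned for the second method can be made concrete with standard Bayesian linear regression. The notation below is an assumption for illustration and not necessarily the speaker's formulation: $D$ collects the reduced-state data, $r$ the corresponding time-derivative data, $o$ the unknown reduced-order operator entries, $\sigma^2$ the noise variance, and $\lambda$ the regularization strength.

$$
\begin{aligned}
r &= D\,o + \varepsilon, \qquad \varepsilon \sim \mathcal{N}(0, \sigma^2 I), \qquad o \sim \mathcal{N}\!\left(0, \tfrac{\sigma^2}{\lambda} I\right), \\
\mu_{\text{post}} &= \left(D^\top D + \lambda I\right)^{-1} D^\top r
 = \arg\min_{o}\; \lVert D\,o - r \rVert_2^2 + \lambda \lVert o \rVert_2^2, \\
\Sigma_{\text{post}} &= \sigma^2 \left(D^\top D + \lambda I\right)^{-1}.
\end{aligned}
$$

Under these assumptions, the posterior mean coincides with the Tikhonov-regularized least-squares estimate, while the posterior covariance supplies the quantification of modeling uncertainty.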

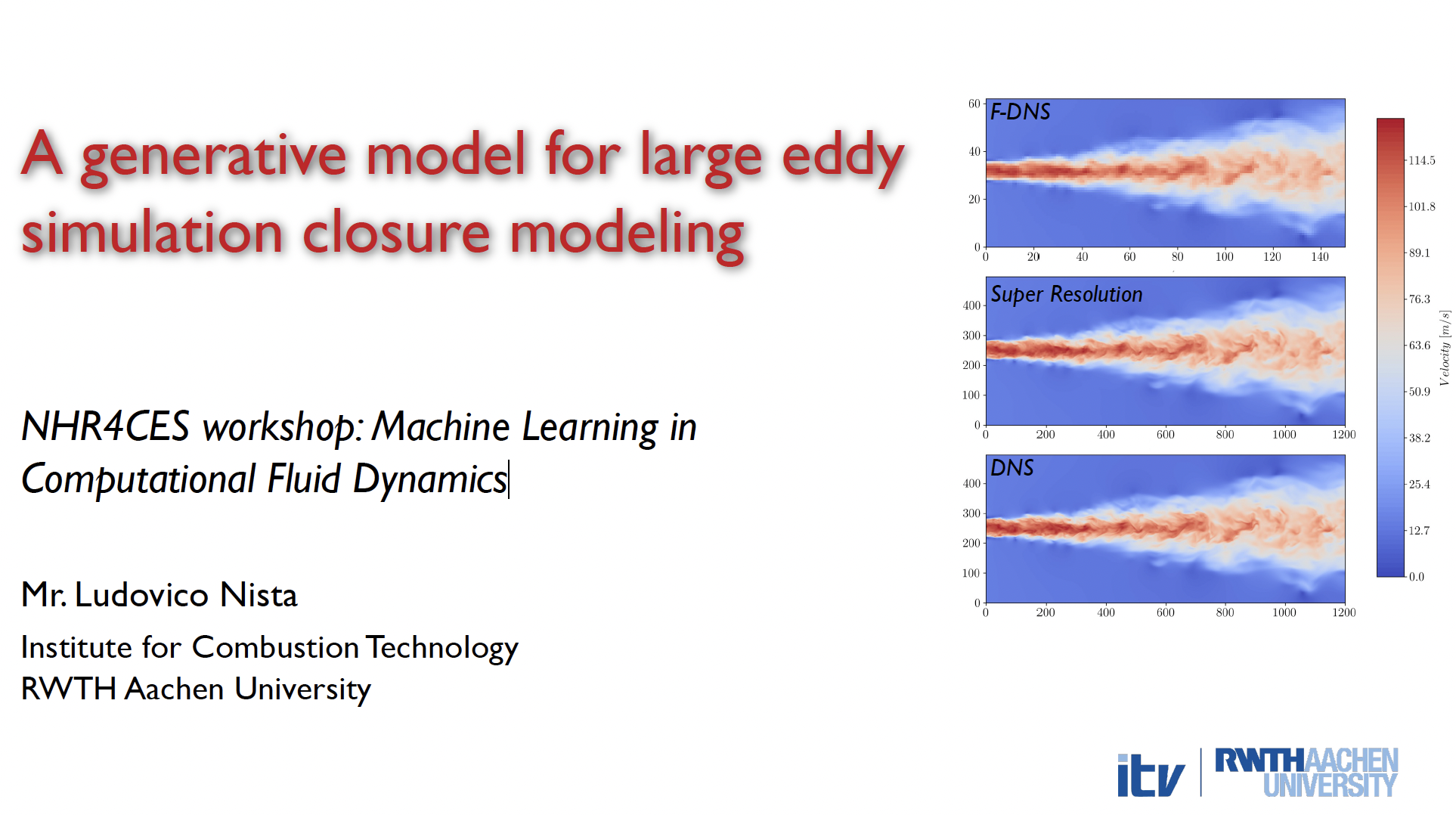

Mr. Jeremy T.P. Karpowski & Mr. Ludovico Nista

Deep learning architectures for thermochemistry and subgrid closure modeling in turbulent combustion

While data-driven methods are utilized in various combustion areas, recent advances in algorithmic developments, the accessibility of open-source software libraries, the availability of computational resources, and the abundance of data have together rendered machine learning techniques ubiquitous in scientific analysis and engineering.

The first part of the talk will focus on the development of an artificial neural network (ANN) chemistry manifold to simulate a premixed methane-air flame undergoing side-wall quenching. Similar to the tabulation of a Quenching Flamelet-Generated Manifold, the neural network is trained on a one-dimensional head-on quenching flame database to learn the intrinsic chemistry manifold. A posteriori investigations demonstrated that the chemical source terms must be corrected at the manifold boundaries to ensure the boundedness of the thermo-chemical state at all times. The ANN model is evaluated against widely used approaches, such as detailed chemistry and the flamelet-based manifold approximation, on a 2D side-wall quenching configuration.

Recently, deep learning frameworks have demonstrated excellent performance in modeling nonlinear interactions and are thus a promising, novel technique to move beyond physics-based models. The second part of the talk will focus on the development of a physics-informed super-resolution generative adversarial network (PIESRGAN) employed for the closure modeling of the unresolved stress and scalar-flux tensors. The data-driven model is evaluated a priori on different configurations and compared against widely used algebraic models. In this context, the contribution of adversarial training, employed by the GAN architecture, is assessed by comparing it with a classical supervised approach. Finally, a priori out-of-sample capabilities are investigated, providing insights into the quantities that need to be conserved for the model to perform well between different regimes, and thus representing a crucial step toward future embedding into LES numerical solvers.

Dr. Corentin Lapeyre

Leveraging AI for better high-fidelity CFD without compromising accuracy and reliability

Numerical simulation is a cornerstone of modern physics and engineering, particularly in relation to fluid flows. Indeed, expensive and accurate simulations drive e.g. climate models, forest fire and flood modeling, the design of sustainable energy systems such as solar and wind power, and of systems around new low-emission energy vectors such as hydrogen.

To address these modeling and design challenges at the pace required by rapid climate change, data-driven models can work in tandem with physics-based approaches and enable faster results while maintaining accuracy and reliability.

This talk will showcase some applications of these new approaches, and discuss some promising avenues for more efficient hybrid solvers in the near term.

Prof. Andrea Beck

Towards data-driven high fidelity CFD

This talk will give an overview of recent successes (and some failures) of combining modern, high-order discretization schemes of Discontinuous Galerkin (DG) type with machine learning submodels and their applications for large-scale computations.

The primary focus will be on supervised learning strategies, where a multivariate, non-linear function approximation of given data sets is found through a high-dimensional, non-convex optimization problem that is efficiently solved on modern GPUs. This approach can thus, for example, be employed in cases where submodels in the discretization schemes currently rely on heuristic data. A prime example of this is shock detection and shock capturing for high-order methods, where essentially all known approaches require some expert user knowledge as guiding input. As an illustrative example, this talk will show how modern, multiscale neural network architectures originally designed for image segmentation can ameliorate this problem and provide parameter-free and grid-independent shock front detection on a subelement level. With this information, we can then inform a high-order artificial viscosity operator for inner-element shock capturing.

In the second part of the talk, Prof. Beck will present data-driven approaches to LES modeling for implicitly filtered high-order discretizations. Whereas supervised learning of the Reynolds force tensor based on non-local data can yield highly accurate results with higher a priori correlation than any existing closures, a posteriori stability remains an issue. Prof. Beck will give reasons for this and introduce reinforcement learning (RL) as an alternative optimization approach. The initial experiments with this method suggest that it is much better suited to account for the uncertainties introduced by the numerical scheme and its induced filter form on the modeling task. For this coupled RL-DG framework, Prof. Beck will present discretization-aware model approaches for the LES equations (cf. Fig. 1) and discuss the future potential of these solver-in-the-loop optimizations.